The Immersion Lab (formerly Pervasive Lab) at the University of Stuttgart provides an open environment to explore cutting-edge immersive and pervasive technologies. The lab facilitates experimental evaluation of the concepts and prototypes developed in SimTech project network 7 (Adaptive Simulation and Interaction).

It offers a broad spectrum of media devices: different displays, including two sizeable transparent touch displays; virtual and augmented reality headsets; computing and networking equipment; motion and body tracking hardware; and, as of 2024, also a large-scale omnidirectional treadmill.

The lab works closely with the Neuromechanics Lab, sharing the space and hardware for investigating human movement, as well as with the remotely located Visualization Lab.

Ongoing Projects

Pervasive simulation envisions people making use of simulation technology anytime, anywhere. Contributing to this, the overarching goal of this project is to enable the visualization of complex biomechanical simulations directly on the body of a person observed in a virtual or augmented reality (VR/AR) setup. The focus of the first phase of project PerSiVal was to establish the necessary conceptual and methodological foundations. As a proof of concept, a three-dimensional, continuum-mechanical upper-arm model was optimized using machine learning techniques and surrogate modeling, and its computations were distributed across different systems while keeping any loss of accuracy to a minimum. In the next phase of project PerSiVal, we will build upon this, significantly widening our scope and increasing the complexity of our work. Beyond simulating upper-arm muscles and visualizing them on a real body, we will specifically utilize VR and haptic feedback technologies for an immersive "beyond reality" experience. Extended biomechanical models, model ensembles, and the live integration of experimental data, as well as concepts for distributed and adaptive pervasive computing systems with multiple devices, will contribute toward this.

https://www.simtech.uni-stuttgart.de/exc/research/pn/pn7/pn7-1-ii/

Hardware: SenseGloves, HoloLens 1/2, Azure Kinect, OptiTrack, iPad Pro

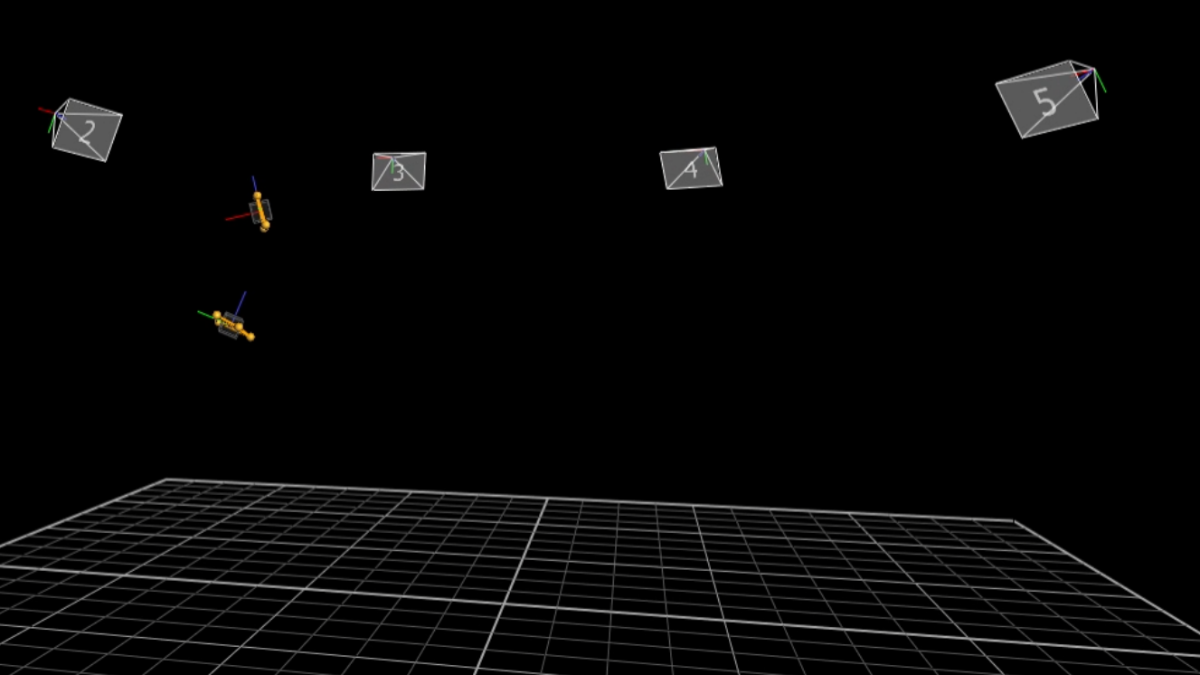

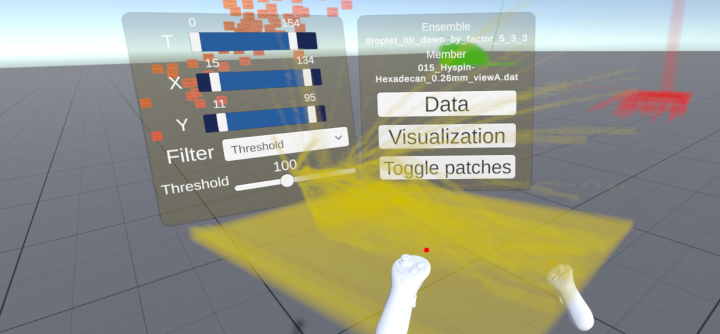

In this project, we explore how the immersive domain, especially virtual reality (VR), can aid the analysis and organization of complex and large spatiotemporal ensembles while utilizing increased virtual space and 3D perception.

The main goal is to develop a more natural approach to interacting with and understanding spatiotemporal ensembles by improving engagement with such data during analysis.

Different visualizations and immersive interaction techniques, such as a fully customizable space-time cube visualization, 3D subset selection, and ML-assisted querying of subsets in 3D, are currently being developed and evaluated.
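As a rough illustration of what 3D subset selection in a space-time cube can mean, the sketch below filters ensemble samples with an axis-aligned box spanning two spatial axes and one time axis. The `Sample` layout, the `select_box` helper, and the example values are hypothetical and are not taken from the project's software.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sample:
    """One ensemble data point in a space-time cube (hypothetical layout)."""
    member: int   # index of the ensemble member the point belongs to
    x: float      # first spatial coordinate
    y: float      # second spatial coordinate
    t: float      # time, mapped to the third axis of the cube


def select_box(samples, x_range, y_range, t_range):
    """Return the samples inside an axis-aligned box (bounds inclusive)."""
    (x0, x1), (y0, y1), (t0, t1) = x_range, y_range, t_range
    return [
        s for s in samples
        if x0 <= s.x <= x1 and y0 <= s.y <= y1 and t0 <= s.t <= t1
    ]


# Made-up example data: three points from two ensemble members.
samples = [
    Sample(member=0, x=0.2, y=0.4, t=1.0),
    Sample(member=0, x=0.9, y=0.4, t=2.0),
    Sample(member=1, x=0.3, y=0.5, t=1.5),
]

# Select everything in the left half of the spatial domain for t in [0.5, 2.0].
selected = select_box(samples, (0.0, 0.5), (0.0, 1.0), (0.5, 2.0))
members = sorted({s.member for s in selected})
```

In an immersive setting, the box bounds would presumably come from a controller or hand gesture rather than from fixed numbers.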

https://www.simtech.uni-stuttgart.de/exc/research/pn/pn6/pn-6-8/

Hardware: Meta Quest 2

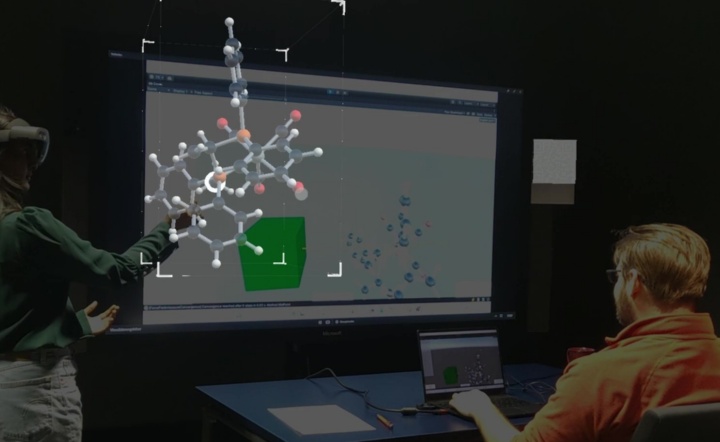

This project aims at porting the augmented reality (AR) and virtual reality (VR) techniques developed in this project network to a chemistry-based application.

In chemistry, three-dimensional models of molecules are central to understanding structure, properties, and reactivity. Compared to usual screen-based computer graphics, AR/VR setups have great potential for more natural inspection of complex topologies.

https://www.simtech.uni-stuttgart.de/exc/research/pn/pn7/pn7-8/

https://github.com/KoehnLab/chARpack

Hardware: Microsoft HoloLens 2, Meta Quest 3

VR and AR have opened up more possibilities for motion guidance: real-time tracking systems enable timely feedback on a trainee's movements, and 3D display technology offers more viewing angles for better understanding. In this project, we aim to find suitable ways to design XR-based motion guidance, considering aspects such as viewing perspective, visual encoding of instructions, motion characteristics, and corrective feedback.

Hardware: HTC VIVE Pro

Could transparent screens offer an alternative to HMDs in order to achieve ubiquitous immersive augmented reality? Large touch-enabled screens have become commercially available for digital signage. We want to explore how this technology could be used for displaying augmented reality content and for interacting with both the virtual content and real objects in the user’s environment. This involves tackling challenges such as using touch-screen interaction to select far-away objects and rendering visually coherent virtual content. Our hardware setup for exploratory user studies includes optical marker tracking (Vicon) and eye tracking (Pupil Invisible).

Past Projects

The overarching goal of this project is to enable the visualization of complex biomechanical simulations, specifically of a human arm, directly on the body of a person to be observed in a virtual or augmented reality (VR/AR) setup. In this project, we developed a 3D continuum-mechanical upper-arm model, optimized its performance using machine learning techniques and surrogate modeling, minimized the loss of accuracy through distributed processing, and finally rendered it on a mobile AR device together with interactive visualizations.

https://www.simtech.uni-stuttgart.de/exc/research/pn/pn7/pn7-1/

Hardware: SenseGloves, HoloLens 1/2, Azure Kinect, OptiTrack, iPad Pro

Small mobile devices such as fitness trackers, smartwatches, or mobile phones allow people to interact with real-time visualizations of data collected from physiological sensors anywhere and anytime, for example, while sitting on the bus, while walking or running, at home, or in the supermarket. This context differs from desktop usage and, together with the minimal rendering space for data representations that is inherent to mobile devices such as smartwatches and fitness trackers, leads to interesting new challenges for visualizations. Therefore, the aim of this project is to study such small data visualizations (micro visualizations) on mobile devices while in motion.

https://www.simtech.uni-stuttgart.de/exc/research/pn/pn7/pn7a-1/

We explore micro visualizations on a smartwatch for pervasive simulation in the context of wrist kinematics. A smartwatch is used as a stand-alone device 1) to track the rotational movements of the wrist and 2) to provide visual and haptic feedback to the wearer to inform them about their progress with the performed exercise. We investigate multiple visualizations to assess their efficiency in assisting a person while performing wrist range-of-motion (ROM) exercises (see image, left). One of the limitations of viewing information on a moving device is the screen occlusion caused by the constant rotation of the wrist. To overcome this problem, we use a secondary device, a smartphone, to mirror the visualizations displayed on the smartwatch in real time: 1) on the smartphone screen and 2) in augmented reality (see image, middle and right).
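To make the idea of exercise progress concrete, the following minimal sketch derives a range of motion from a series of wrist rotation angles and compares it with a target. The function names, the 60-degree target, and the sample angles are assumptions for illustration only; they do not describe the project's actual implementation, and the sketch assumes angles that do not wrap around at ±180 degrees.

```python
def range_of_motion(angles_deg):
    """Span between the smallest and largest recorded angle, in degrees."""
    if not angles_deg:
        return 0.0
    return max(angles_deg) - min(angles_deg)


def progress(angles_deg, target_rom_deg):
    """Fraction of the target range reached so far, clamped to at most 1."""
    if target_rom_deg <= 0:
        raise ValueError("target_rom_deg must be positive")
    return min(range_of_motion(angles_deg) / target_rom_deg, 1.0)


# Hypothetical wrist rotation angles sampled during one exercise repetition.
wrist_angles = [-10.0, 5.0, 20.0, 35.0]

rom = range_of_motion(wrist_angles)                  # 45.0 degrees
done = progress(wrist_angles, target_rom_deg=60.0)   # 0.75
```

A value such as `done` could then drive a micro visualization, for example the fill level of a progress ring on the watch face.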

Hardware: Smartwatch: Fossil Carlyle HR Gen.5, Smartphone: Google Pixel 4a.

We evaluated people's ability to read a set of micro visualizations from a smartwatch (see image, right) while walking. We tested three different speeds controlled via a treadmill: slow walking speed (2 km/h), fast walking speed (4 km/h), and slow jogging speed (6 km/h). We used an electric treadmill (see image, left) that operates over a range of speeds from 1 km/h to 16 km/h. Additionally, we tracked wrist and head motion using a Vicon tracking system equipped with eight infrared cameras sampling at 120 Hz.
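For a sense of scale, the short sketch below converts the three tested treadmill speeds to metres per second and estimates how far the belt travels between two consecutive tracking samples at 120 Hz. The helper names are hypothetical, and the calculation is plain unit arithmetic rather than part of the study's analysis.

```python
def kmh_to_ms(speed_kmh):
    """Convert a speed from km/h to m/s."""
    return speed_kmh * 1000.0 / 3600.0


def travel_per_sample_mm(speed_kmh, sample_rate_hz=120.0):
    """Belt travel in millimetres between two consecutive tracking samples."""
    return kmh_to_ms(speed_kmh) / sample_rate_hz * 1000.0


# The three speeds used in the study, in km/h.
tested_speeds_kmh = [2.0, 4.0, 6.0]

for speed in tested_speeds_kmh:
    print(
        f"{speed:.0f} km/h = {kmh_to_ms(speed):.2f} m/s, "
        f"{travel_per_sample_mm(speed):.1f} mm per sample"
    )
```

At the slow jogging speed, the belt moves roughly 14 mm between samples.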

Hardware: Christopeit electric treadmill TM 2 Pro, Vicon System, Smartwatch: Fossil Carlyle HR Gen.5

MolecuSense is a virtual version of a physical molecule construction kit, based on visualization in virtual reality (VR) and interaction with force-feedback gloves. Targeting chemistry education, our goal is to make virtual molecule structures more tangible. Results of an initial user study indicate that the VR molecular construction kit was positively received. Compared to a physical construction kit, the VR molecular construction kit is on the same level in terms of natural interaction. In addition, it offers the typical digital advantages, such as saving, exporting, and sharing molecules. Feedback from the study participants has also revealed potential future avenues for tangible molecule visualizations.